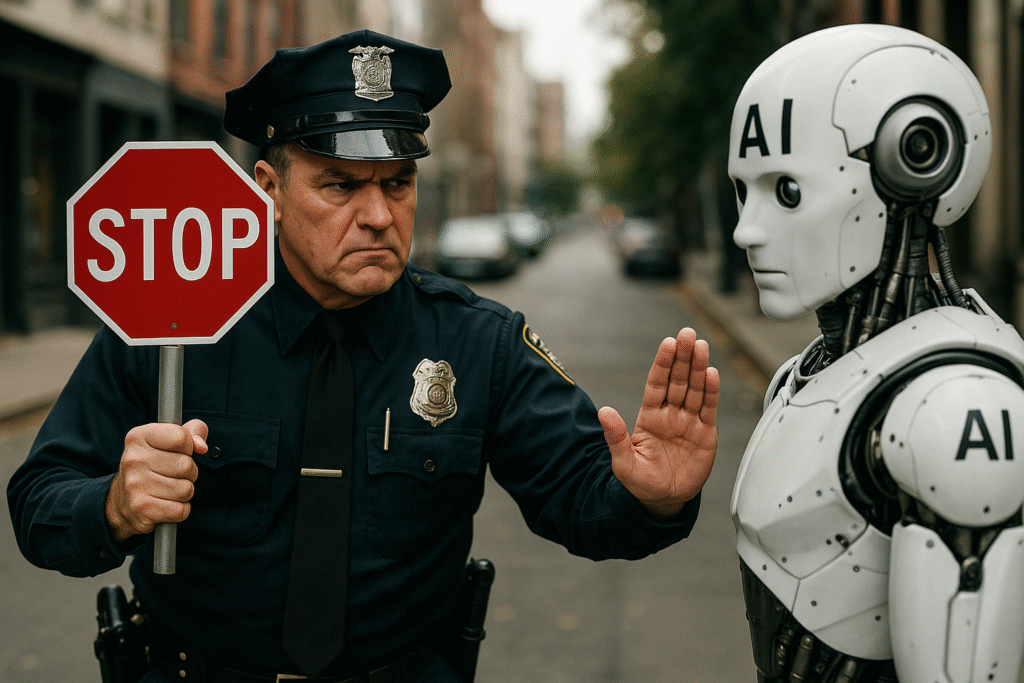

Cloudflare is stepping up to take on one of the biggest problems facing the modern internet: AI bots stealing content. The company, which powers a massive portion of the web, now blocks AI crawlers from scraping websites without permission, and the block is on by default.

That is right. New domains that sign up with Cloudflare will automatically block AI crawlers unless the site owner says otherwise. Existing customers can also choose whether to allow bots based on what those bots plan to do with the content, such as training models or powering search tools.


For years, websites have relied on the classic search engine model. You post content, Google indexes it, and users find their way back to you. Traffic flows in, and with it comes ad revenue, subscriptions, and visibility. But that formula has been upended by AI. Language models now scrape entire websites, consume articles, and spit out answers without ever sending users back to the source.

That hurts publishers. Badly.

I am not talking about hypothetical harm. I mean fewer clicks, less revenue, and declining incentives to create new content. If AI bots just take what they want and never link back, why bother making anything at all?

Cloudflare’s default block is an attempt to reverse that damage. The idea is simple: force AI companies to play by new rules. If they want access to original work, they need to ask and, in many cases, pay.

Matthew Prince, Cloudflare’s CEO, summed it up well. “If the Internet is going to survive the age of AI, we need to give publishers the control they deserve and build a new economic model that works for everyone,” he said.

And I agree. Content creators, myself included, have been losing control over our work for years now. Seeing Cloudflare step up like this gives me a bit of hope that the tide is starting to turn.

This move is not happening in a vacuum. Dozens of major publishers are backing it, including TIME, The Atlantic, Condé Nast, Gannett, Dotdash Meredith, BuzzFeed, and even Reddit. These companies rely on original content, and they have all expressed concern over the way AI models are trained today.

Reddit’s CEO, Steve Huffman, made a solid point. AI bots need to identify themselves and be honest about what they are doing. Cloudflare’s new system helps with that. It provides a framework for bots to disclose their purpose and for website owners to accept or deny them based on that.

It is a win for transparency. And hopefully, for fairness too.

This is not about halting progress or blocking AI altogether. It is about fixing a broken value exchange. If AI is built on human creativity, then humans and the platforms that support them deserve a say in how that content is used.

I have personally grown tired of seeing bots pull from articles I worked hard to write, with no credit or traffic in return. If the web is going to remain a place worth publishing on, we need changes like this.

Cloudflare’s decision is obviously just the beginning. However, it is a smart first step. It pushes the industry toward a permission-based model that protects creators without shutting down innovation.

Quite frankly, it’s about damn time.

Otherwise, the “new economic model” that publishers resort to may simply be paywalls.