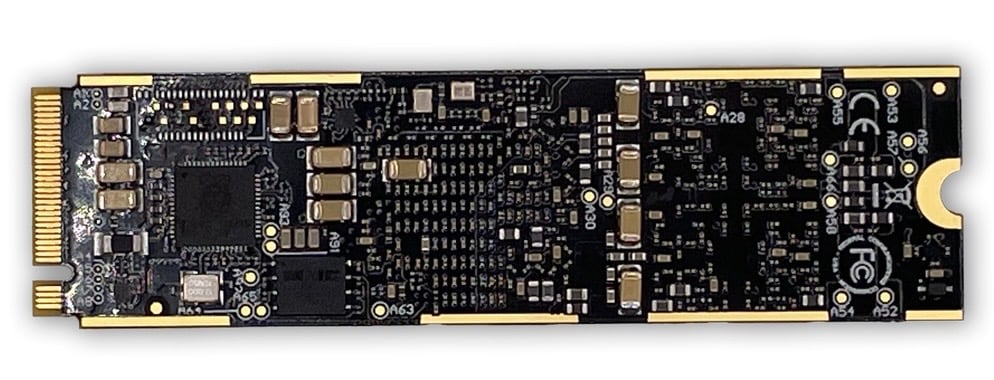

Axelera AI has introduced the Metis M.2 Max, a compact accelerator card designed to bring serious AI horsepower to the edge. Unlike many M.2 cards that struggle to match the performance of larger PCIe solutions, this one promises to deliver desktop-level inference in a slim 2280 module.

The Metis M.2 Max is not a storage drive, even though it does look like one. It’s an AI accelerator card designed to offload and speed up inference workloads such as running large language models, vision transformers, and other neural networks.

By handling the specialized math that drives modern AI, it reduces the burden on CPUs and GPUs while delivering faster results in a compact, low-power form factor. That makes it well suited for edge deployments where space, efficiency, and security matter more than raw server-class horsepower.

The company claims the Metis M.2 Max doubles memory bandwidth over its predecessor while also slimming down its profile for easier integration. It comes with up to 16 GB of memory and advanced thermal management, which should help keep things stable under demanding workloads. Edge deployments often face harsh conditions, and Axelera says it will offer both standard (-20°C to +70°C) and extended (-40°C to +85°C) temperature versions.
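For anyone sizing a deployment, the two temperature grades boil down to a simple range check. The Python sketch below is only an illustration: the `VARIANTS` table and the `pick_variant` helper are hypothetical names, and it assumes the quoted ranges can be compared directly against the temperatures expected at the installation site, which the announcement does not spell out.

```python
from typing import Optional

# Temperature grades as quoted in the article, in degrees Celsius.
# Treating them as site temperature limits is an assumption.
VARIANTS = {
    "standard": (-20, 70),
    "extended": (-40, 85),
}


def pick_variant(min_site_c: float, max_site_c: float) -> Optional[str]:
    """Return the first grade whose range covers the site's extremes.

    Grades are checked from narrowest to widest, so "standard" is
    preferred whenever it is sufficient. Returns None if neither fits.
    """
    if min_site_c > max_site_c:
        raise ValueError("min_site_c must not exceed max_site_c")
    for name in ("standard", "extended"):
        low, high = VARIANTS[name]
        if low <= min_site_c and max_site_c <= high:
            return name
    return None


if __name__ == "__main__":
    print(pick_variant(0, 50))     # indoor cabinet
    print(pick_variant(-30, 60))   # outdoor enclosure
```

A site that dips below -40°C or rises above +85°C yields `None`, meaning neither listed version covers it on paper.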

Performance gains target the workloads everyone cares about right now: large language models and vision transformers. Axelera says the new card delivers a 33 percent performance uplift on convolutional neural networks and can process double the tokens per second for LLMs and vision-language models compared to the prior generation. Despite this boost, it stays within an average power draw of just 6.5 watts.
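Axelera's figures are relative, so they only turn into real numbers once you plug in your own measurements from the previous card. Here is a rough Python sketch of that arithmetic. The function names are hypothetical, the baseline values in the usage lines are placeholders rather than benchmark results, and it assumes the 6.5 watt average applies while the workload is running.

```python
# Multipliers and power figure taken from the article's claims.
CNN_UPLIFT = 1.33     # "33 percent uplift" on CNNs
LLM_UPLIFT = 2.0      # "double the tokens per second"
AVG_POWER_W = 6.5     # average power in watts


def projected_cnn_fps(baseline_fps: float) -> float:
    """Scale a measured prior-generation CNN frame rate by the claimed uplift."""
    return baseline_fps * CNN_UPLIFT


def projected_tokens_per_s(baseline_tps: float) -> float:
    """Scale a measured prior-generation token rate by the claimed uplift."""
    return baseline_tps * LLM_UPLIFT


def joules_per_token(tokens_per_s: float, power_w: float = AVG_POWER_W) -> float:
    """Energy per token: watts are joules per second, so divide by tokens per second."""
    if tokens_per_s <= 0:
        raise ValueError("tokens_per_s must be positive")
    return power_w / tokens_per_s


if __name__ == "__main__":
    # Placeholder baselines; substitute your own measurements.
    fps = projected_cnn_fps(100.0)
    tps = projected_tokens_per_s(10.0)
    print(f"CNN: {fps:.1f} fps, LLM: {tps:.1f} tok/s, "
          f"{joules_per_token(tps):.3f} J/token")
```

Energy per token is worth tracking for edge work because it stays comparable across cards with different power budgets.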

Security is a major focus, thankfully. Metis M.2 Max integrates a Root of Trust with secure boot and upgrade support, ensuring only authenticated firmware runs on a system. That’s important for industries such as manufacturing, healthcare, and public safety, where compromised devices can have major consequences.

Developers won’t be left struggling with integration. The card works with Axelera’s Voyager SDK, which supports proprietary and industry-standard models. For system builders, an optional low-profile heatsink reduces height by 27 percent, opening up deployment in tighter spaces. Power- and thermal-constrained users can also take advantage of an onboard power probe that automatically adjusts performance to fit the limits of a given environment.
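Axelera has not detailed how the power probe makes its decisions. To give a feel for what balancing performance against a power limit can look like in general, here is a generic step-based throttle in Python. It is not Axelera's algorithm and uses no Voyager SDK calls; `next_perf_level`, the 0.1 to 1.0 performance scale, and the 90 percent headroom threshold are all assumptions made up for this illustration.

```python
def next_perf_level(
    current_level: float,
    measured_w: float,
    budget_w: float,
    step: float = 0.05,
) -> float:
    """Return the next performance level, clamped to [0.1, 1.0].

    Hypothetical policy: back off one step when over budget, speed up one
    step when there is more than 10 percent headroom, otherwise hold.
    """
    if budget_w <= 0:
        raise ValueError("budget_w must be positive")
    if measured_w > budget_w:
        level = current_level - step      # over budget: throttle down
    elif measured_w < 0.9 * budget_w:
        level = current_level + step      # clear headroom: speed up
    else:
        level = current_level             # inside the dead band: hold
    return min(1.0, max(0.1, level))


if __name__ == "__main__":
    level = 1.0
    # Simulated power readings in watts against a 6.5 W budget.
    for reading in (7.2, 6.9, 6.4, 5.0):
        level = next_perf_level(level, reading, budget_w=6.5)
        print(f"{reading:.1f} W -> level {level:.2f}")
```

The dead band between 90 and 100 percent of the budget keeps the level from bouncing up and down on every reading.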

Shipping begins in Q4 2025, though pricing hasn’t been announced. For those experimenting with edge AI and Linux-based inference at scale, the Metis M.2 Max could be a practical way to bring transformer models and LLMs closer to real-world deployments.