AI might be the future of software development, but a new report suggests we’re not quite ready to take our hands off the wheel. Veracode has released its 2025 GenAI Code Security Report, and the findings are pretty alarming. Out of 80 carefully designed coding tasks completed by over 100 large language models, nearly 45 percent of the AI-generated code contained security flaws.

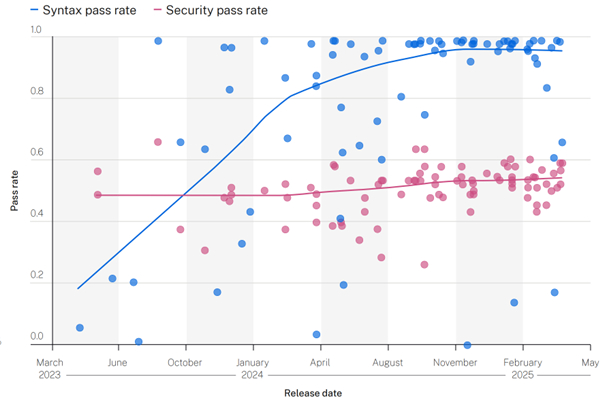

That’s not a small number. These are not minor bugs, either. We’re talking about real vulnerabilities, with many falling under the OWASP Top 10, which highlights the most dangerous issues in modern web applications. The report found that when AI was given the option to write secure or insecure code, it picked the wrong path nearly half the time. And perhaps more concerning, things aren’t getting better. Despite improvements in generating functional code, these models show no progress in writing more secure code.

I’m excited about AI in development. It really is the future. But let’s be real, folks, human developers are still needed. You can’t blindly copy and paste from an AI and hope it’s safe. Someone has to be reviewing this stuff. That’s especially true now that developers are relying more on what’s being called “vibe coding,” where you let the AI do its thing without giving it any real security guidelines. In that setup, you’re basically trusting a chatbot to make security decisions, and according to Veracode, it gets it wrong far too often.

The company’s research team used a series of code-completion tasks tied to known vulnerabilities, based on the MITRE CWE list. Then they ran the AI-generated code through Veracode Static Analysis. The results speak for themselves. Java was the riskiest language, with a failure rate of over 70 percent. Python, JavaScript, and C# fared better but were still far from safe, each failing between 38 and 45 percent of the time. When it came to specific weaknesses, like cross-site scripting and log injection, the failure rates shot up to 86 and 88 percent, respectively.
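
To make those two weaknesses concrete, here is a minimal Python sketch of what the "secure path" can look like for cross-site scripting and log injection (catalogued by MITRE as CWE-117). This is my own illustration, not code from the Veracode report, and the function names `render_greeting` and `sanitize_for_log` are hypothetical.

```python
import html


def sanitize_for_log(value: str) -> str:
    """Neutralize CR/LF so user input cannot forge extra log lines."""
    return value.replace("\r", "\\r").replace("\n", "\\n")


def render_greeting(name: str) -> str:
    """Escape HTML metacharacters before embedding user input in markup."""
    return f"<p>Hello, {html.escape(name)}!</p>"


# Attacker-controlled input stays on one log line and renders as inert text.
print(sanitize_for_log("bob\nINFO admin logged in"))
print(render_greeting("<script>alert(1)</script>"))
```

The insecure version of each function is simply the one that skips the escaping step, which is the shortcut the report says models take far too often.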

It’s not just that vulnerabilities are increasing. The report also points out that AI is making it easier for attackers to find and exploit them. Now, even low-skilled hackers can use AI tools to scan systems, identify flaws, and whip up exploit code. That shifts the entire security landscape, putting defenders on the back foot.

Veracode is urging organizations to get ahead of this. Its advice is pretty straightforward: bake security into every part of the development pipeline. That means using static analysis early, integrating tools like Veracode Fix for real-time remediation, and even building security awareness into AI agent workflows. There’s also a push to use Software Composition Analysis to sniff out risky open-source components and set up a “package firewall” to block known bad packages before they do any damage.
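
For a rough idea of what a “package firewall” does, here is a toy Python sketch that checks requirements-style lines against a denylist before anything gets installed. It is purely illustrative and does not reflect how Veracode’s product works; the `BLOCKED_PACKAGES` set, its package names, and the `blocked_requirements` function are all made up for this example. A real tool would rely on a curated, continuously updated feed.

```python
import re

# Hypothetical denylist; these package names are invented for illustration.
BLOCKED_PACKAGES = {"example-malicious-pkg", "examp1e-typosquat"}


def blocked_requirements(requirement_lines):
    """Return the names of denylisted packages found in requirements-style lines."""
    blocked = []
    for line in requirement_lines:
        # Drop trailing comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        # The package name is everything before a version specifier, extra, or marker.
        name = re.split(r"[<>=!~;\[\s]", line, maxsplit=1)[0].lower()
        if name in BLOCKED_PACKAGES:
            blocked.append(name)
    return blocked


requirements = ["requests==2.32.0", "Example-Malicious-Pkg>=1.0  # oops"]
print(blocked_requirements(requirements))
```

A check like this would sit in the build pipeline and fail the job whenever the returned list is not empty.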

One surprising note in the research is that bigger AI models didn’t necessarily perform better than smaller ones. That suggests this is not a problem of scale, but rather something built into how these models are trained and how they handle security-related logic.

From where I sit, the takeaway is quite simple. Yes, AI can help us code faster. But if we want to code smarter (and safer), humans still need to stay in the loop.