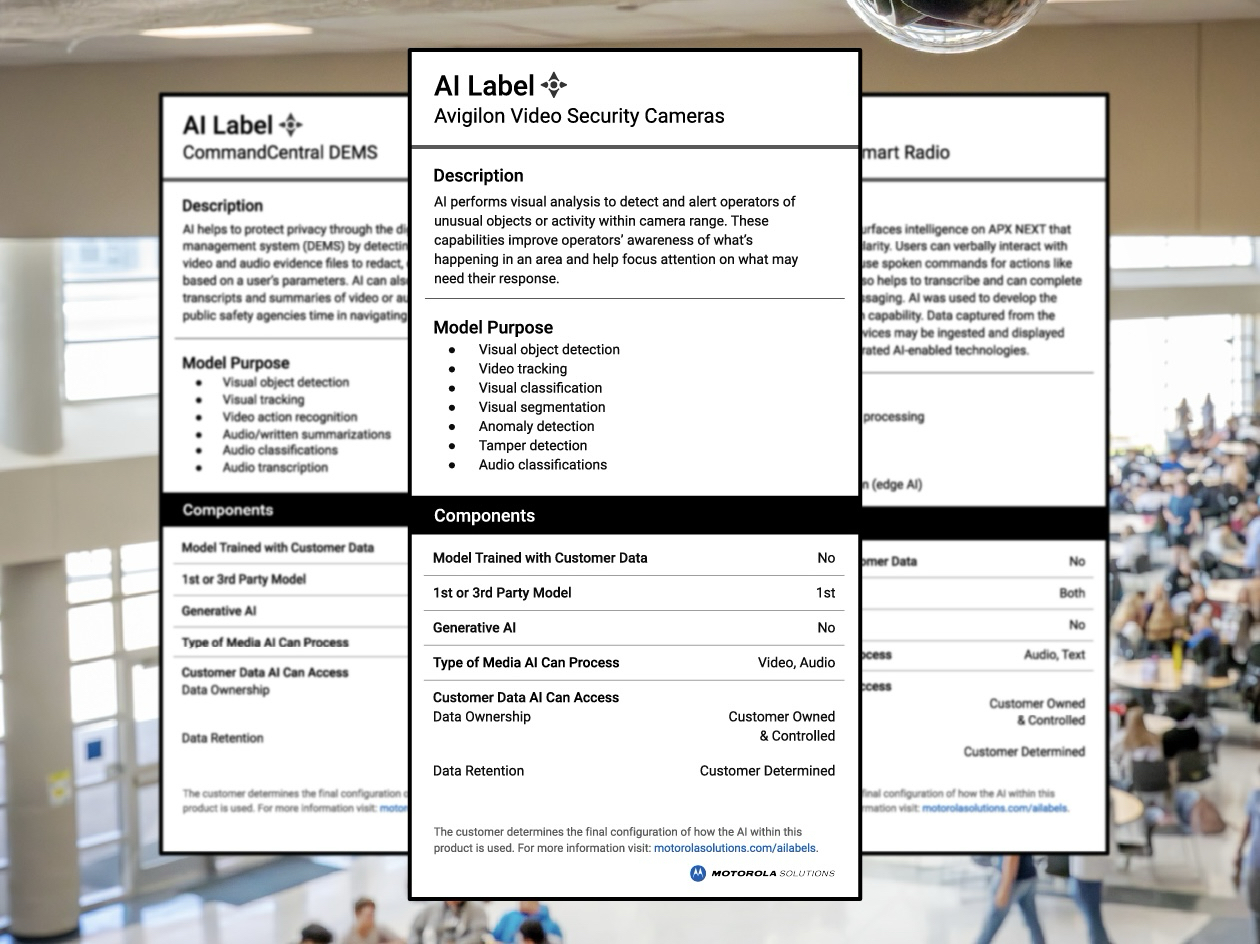

Motorola Solutions is trying something new with its AI-powered security tools. The company is introducing what it calls “AI nutrition labels,” designed to help users understand how artificial intelligence is being used inside its products. It’s a bit like the labels on food boxes, but instead of calories and sugar, you’re getting insight into algorithms, data handling, and human oversight.

Each label will outline the type of AI being used, what it does, who owns the data, and whether there are any human checks in place. Motorola says it’s doing this to improve transparency and build trust with customers who rely on its technology for public safety and enterprise security.

I’ll be honest, folks, I rather like the idea. Giving people a clearer look into the guts of AI systems is a good step. But here’s the catch: Motorola is making up its own labeling system. Without a consistent standard across the industry, this risks becoming more of a branding exercise than a real move toward accountability.

Look, if every company makes its own version, comparing them is going to be a mess. Ideally, these labels should follow a common format, maybe even one backed by government regulation. That way, users and watchdogs could actually measure one product against another and hold companies to something more than marketing talk.

Motorola says its AI systems are meant to assist humans, not replace them. The company emphasizes that its tools help people make faster, more informed decisions during high-pressure situations. That’s a reasonable claim, especially in emergency response or security scenarios where every second counts.

But the real value in these new labels will depend on how much detail Motorola is actually willing to share. So far, there’s no independent auditing or open access to the models themselves.

The effort is being guided by the Motorola Solutions Technology Advisory Committee, which the company describes as its internal “technical conscience.” This group is supposed to help evaluate the ethics and implications of new AI features before they are rolled out. Whether that committee has any real independence or teeth is still unclear.

Still, this appears to be the first time a major public safety company has tried anything like this. In a world where AI decisions are often hidden behind layers of corporate secrecy, even a small amount of transparency is better than nothing. It might not fix the trust problem, but it could at least spark a conversation.

For those interested in seeing the labels or learning more about how they work, Motorola has set up a landing page at motorolasolutions.com/ailabels.